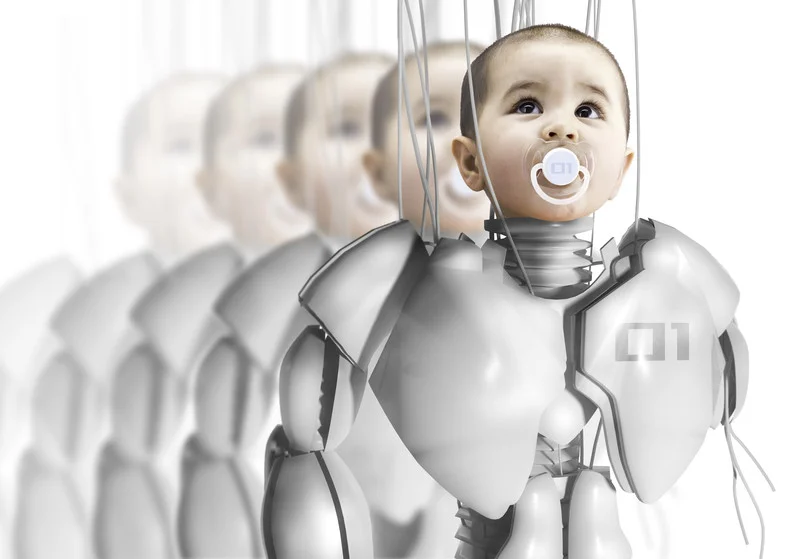

The term ‘designer babies’ has been bandied about for some years now. It refers to babies whose genetic make-up has been selected or altered, whether to eradicate a disease or defect or to ensure that particular genes are present. Scientists have discussed the idea for many years, and in science fiction the concept has been around for much longer. The issue, however, is extremely controversial, and as science races to catch up with science fiction, the debate is becoming a serious one.

Pre-Implantation Genetic Diagnosis

In fact, the concept of ‘designer babies’ is not that far-fetched: doctors and scientists already use a form of gene selection during in-vitro fertilization (IVF) treatments. Pre-implantation genetic diagnosis, or PGD, refers to the practice of screening IVF embryos, both for disease and for gender selection. Using this process, doctors can identify embryos that carry certain genetic diseases and choose unaffected embryos for implantation, and in this way they can effectively ‘design’ babies without those diseases. A related but separate technique replaces defective mitochondria (the ‘powerhouses’ of cells) with healthy mitochondria from a donor egg. Of course, PGD doesn’t work for all diseases, and it can also be used for non-medical preferences, such as the gender of the resulting child. Both uses of PGD are currently legal in the US, although the American Congress of Obstetricians and Gynecologists frowns upon the latter, arguing that allowing parents to choose the gender of their child risks increasing sex discrimination[1].

DNA Editing

There is more to come, though. Dr. Tony Perry of the University of Bath in the UK was one of the first scientists to clone mice and pigs, and he claims that more in-depth DNA editing is on its way. It won’t be long, he says, until we can pick and choose which parts of our baby’s DNA we want to cut out and potentially replace with new pieces of genetic code[2]. In fact, it has already begun. Earlier this year, scientists in China took discarded IVF embryos and began experiments to correct the abnormal gene that causes the blood disorder beta thalassemia[3]. Even though these embryos were due to be destroyed, the experiments incited much controversy. Whilst few would argue against potential disease eradication, this technique could be used to alter healthy genes too, and the real question is: how far is too far?